Looking for good crawler and indexing settings for your Blogspot or Blogger site? You’re in the right place!

In this guide, I will show you the best settings for crawler and indexing on Blogger to accelerate your website’s ranking.

Given the absence of SEO plugins in Blogger, it’s crucial to leverage all available options to speed up the indexing and ranking of your website on search engines like Google and Bing.

Getting the crawler and indexing settings right in Blogger is an important part of search engine optimization (SEO). Good settings help search engines find and index your posts sooner.

If crawling and indexing are new to you, read the short overview below first, then move on to the Blogger Crawlers and indexing settings.

What is a Crawler?

“Crawling” is a term used in SEO to describe the process where search engines send out bots, also known as crawlers or spiders, to discover new and updated content on the web.

Search engines like Google and Bing run these crawlers. They discover and scan webpages so that the pages can be added to the search engine's index.

When you type a query into the search bar, the search engine does not crawl the web at that moment. It looks up the information its crawlers have already collected from a large number of pages and sorts the matches on the Search Engine Results Page (SERP).

The main objective of these crawlers is to learn and categorize the topics and contents on the webpage so that they can be retrieved whenever someone searches for them.
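To make the idea of discovery concrete, here is a minimal sketch of how a crawler might follow links from one page to the next. The `PAGES` dictionary and its URLs are made up for illustration and stand in for real webpages; real crawlers are far more involved.

```python
from collections import deque

# Hypothetical in-memory "website": each URL maps to the links found on that page.
PAGES = {
    "/": ["/2024/01/first-post.html", "/p/about.html"],
    "/2024/01/first-post.html": ["/", "/2024/02/second-post.html"],
    "/2024/02/second-post.html": ["/"],
    "/p/about.html": [],
}


def crawl(start: str, pages: dict[str, list[str]]) -> list[str]:
    """Return URLs in the order a breadth-first crawler would discover them."""
    seen = {start}
    queue = deque([start])
    order = []
    while queue:
        url = queue.popleft()
        order.append(url)
        # Queue every link on this page that has not been seen yet.
        for link in pages.get(url, []):
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return order


discovered = crawl("/", PAGES)
print(discovered)
```

Starting from the home page, the sketch reaches every post that is linked from somewhere, which is why internal links matter for discovery.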

What is indexing?

Once these crawlers have found the content, the next step is “indexing”. This is where the search engines store the information they’ve found in a massive database.

This database is then used to serve up relevant results when someone makes a search. It’s similar to a librarian cataloging a new book so that it can be easily found by readers.

Indexing is the process where all the content collected through crawling is stored and organized in the database. Once a page is indexed, it becomes eligible to appear in the search engine results.

Hence, indexing your website is crucial; without it, your site won’t appear in the search results.
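The librarian analogy can be sketched as a tiny "inverted index" that maps each word to the pages containing it. This is only a toy model with made-up URLs and text (`DOCUMENTS` is hypothetical), not how any real search engine stores its data.

```python
from collections import defaultdict

# Hypothetical crawled pages: URL -> text found on the page.
DOCUMENTS = {
    "/2024/01/first-post.html": "blogger seo settings guide",
    "/2024/02/second-post.html": "custom robots txt for blogger",
}


def build_index(documents: dict[str, str]) -> dict[str, set[str]]:
    """Map every word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in documents.items():
        for word in text.lower().split():
            index[word].add(url)
    return index


def search(index: dict[str, set[str]], query: str) -> set[str]:
    """Return the URLs that contain every word of the query."""
    words = query.lower().split()
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results


index = build_index(DOCUMENTS)
print(search(index, "robots blogger"))
```

A page that was never crawled never enters the index, so no query can return it.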

![How Google Search Works via Crawling, Indexing and Ranking [Infographic]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEin-WeKKAR08nQuVwx8cuvlAmXZlshSFQ5vmt8C8wuxw3QI4mjCuAPE9X7l1OxPGXqJgkTvz1dybq_RiLp0W5gwHDlAslLDjDOvnhLwWzaHmkNcHcnVftKOaw5WtdienoiMwYPqavKrFKoWM_dboXTRIcwdJ_989k_PQskgS-s7RhBk16CBN2TeLOvA/s16000-rw/how-google-search-works.png)

Crawlers and indexing settings on Blogger

In Blogger, the Crawlers and indexing settings are turned off by default.

That doesn't mean your blog won't get indexed, but it may take longer for your posts to be indexed, because crawlers will crawl everything on your website, including unnecessary pages like archive pages.

So, you should enable the Crawlers and indexing settings and customize them. This helps crawlers understand which posts and pages to crawl, so your posts can get indexed faster.

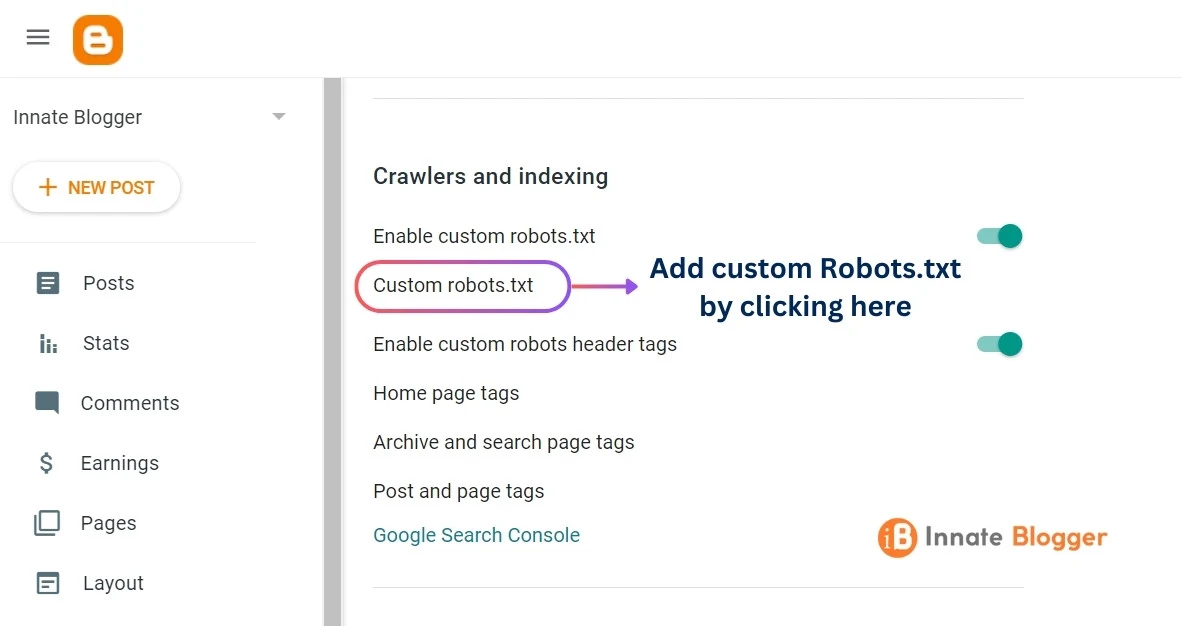

Custom Robots.txt

Log in to the Blogger dashboard, go to your blog's Settings, and scroll down to the Crawlers and indexing section. First, enable the custom robots.txt option.

A robots.txt file tells web crawlers which posts or pages they may request and which ones they should not crawl. It can also reduce unnecessary bot requests to your website.

Next, add your custom robots.txt content. You can copy the example below and paste it in after replacing the domain name with your own.

    User-agent: *
    Disallow: /search
    Allow: /
    Sitemap: https://www.example.com/sitemap.xml
    Sitemap: https://www.example.com/sitemap-pages.xml

Note: Disallowing /search stops crawlers like Googlebot from crawling your search result and label pages. This can save crawl budget for new websites.
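Before pasting the rules into Blogger, you can sanity-check them locally. The sketch below uses Python's standard `urllib.robotparser` module; the post and label URLs are made-up examples, and Python's parser is only an approximation of how a given search engine reads the file.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above; replace the domain with your own.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Allow: /
Sitemap: https://www.example.com/sitemap.xml
Sitemap: https://www.example.com/sitemap-pages.xml
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Hypothetical URLs: a normal post and a label page under /search.
post_allowed = parser.can_fetch(
    "Googlebot", "https://www.example.com/2024/01/my-post.html"
)
label_allowed = parser.can_fetch(
    "Googlebot", "https://www.example.com/search/label/SEO"
)

print("post allowed:", post_allowed)
print("label page allowed:", label_allowed)
print("sitemaps:", parser.site_maps())  # site_maps() needs Python 3.8+
```

If the post URL came back as blocked, that would be a sign the rules are stricter than intended.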

Custom robots header tags

Once you enable the option for custom robots header tags, you will see that Home page tags, Archive and Search page tags, and Post and Page tags options are available. Let me just explain the meanings of those tags.

- all: there are no restrictions; crawlers may index the page and follow its links.

- noindex: the page can still be crawled, but search engines are asked not to show it in search results.

- nofollow: blog posts contain both internal and external links. Crawlers normally follow the links on a page; enable this option if you don't want crawlers to follow any links on that page.

- none: it means both "noindex" and "nofollow" at once.

- noarchive: it stops search engines from showing a cached copy of the page in search results.

- nosnippet: it stops search engines from showing snippets of your web pages.

- noodp: it told search engines not to use titles and snippets from the Open Directory Project (DMOZ). DMOZ closed in 2017, so this tag no longer has any practical effect, though it is harmless to leave enabled.

- notranslate: it stops search engines from offering a translation of the page in search results.

- noimageindex: in this case, your post will be crawled and indexed, but the images on it will not be indexed.

- unavailable_after: it asks search engines to stop showing a post in search results after a date you specify.
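As a rough illustration of how these tags combine, here is a small sketch that turns a comma-separated list of tags into plain yes/no answers. The function name `interpret_robots` is made up for this example, and it covers only a few of the tags above; it is not how any search engine is implemented.

```python
def interpret_robots(content: str) -> dict[str, bool]:
    """Translate a robots tag list such as "noindex, noodp" into simple flags."""
    directives = {d.strip().lower() for d in content.split(",") if d.strip()}
    # "none" is shorthand for "noindex" plus "nofollow".
    if "none" in directives:
        directives |= {"noindex", "nofollow"}
    return {
        "index": "noindex" not in directives,
        "follow": "nofollow" not in directives,
        "archive": "noarchive" not in directives,
        "snippet": "nosnippet" not in directives,
    }


print(interpret_robots("all, noodp"))
print(interpret_robots("noindex, noodp"))
print(interpret_robots("none"))
```

Note that a tag like noodp changes none of the flags here, while a single noindex is enough to keep a page out of search results.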

Now that you know the details of the robots tags, you can decide for yourself which ones to choose. By the way, here are the best settings for a typical blog or website. Enable only these tags for each category.

- Custom robot tags for the home page: all, noodp

- Custom robot tags for archive and search pages: noindex, noodp

- Custom robot tags for posts and pages: all, noodp

Manage custom robot tags for each Blogger post

After you change the Crawlers and indexing settings, custom robot tags will also be available in the post settings, at the bottom right-hand side of the post editor. So, you can easily control the settings for each individual post.
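After changing the tags for a post, it can be worth checking what the published page actually serves. The sketch below reads the robots meta tag out of a page's HTML using Python's standard `html.parser`. `SAMPLE_HTML` is a made-up page, not Blogger's exact output; in practice you would paste in the source of your own post.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            self.directives += [
                d.strip().lower() for d in content.split(",") if d.strip()
            ]


def robots_directives(html: str) -> list[str]:
    """Return the robots directives found in the given HTML source."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


# Hypothetical page source for illustration only.
SAMPLE_HTML = """<html><head>
<title>Sample post</title>
<meta name="robots" content="noindex, noodp">
</head><body><p>Hello</p></body></html>"""

found = robots_directives(SAMPLE_HTML)
print(found)
```

If a post you want in search results shows noindex here, recheck its custom robot tags in the post settings.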

By configuring these settings, you'll help crawlers understand which posts and pages to crawl, leading to faster indexing of your blog posts. Remember that enabling the right settings is essential for effective SEO!

Feel free to customize these settings based on your specific needs.

Conclusion

Crawlers and indexing settings can play a major role in your blog's SEO, but if you get them wrong, they can also remove your posts from search results completely. So, you need to set them up carefully.

Remember that proper settings for crawlers and indexing are crucial for search engine optimization (SEO). By following these guidelines, your Blogger website will have a better chance of ranking well in search results!

If you still have any questions, don't forget to comment.